Forecast evaluation

Importance of evaluation

- Because forecasts are unconditional quantitative statements about the future (“what will happen”), we can compare them to observed data and see how well they performed

- Doing this allows us to answer questions like:

  - Are our forecasts any good?

  - How far ahead can we trust forecasts?

  - Which model works best for making forecasts?

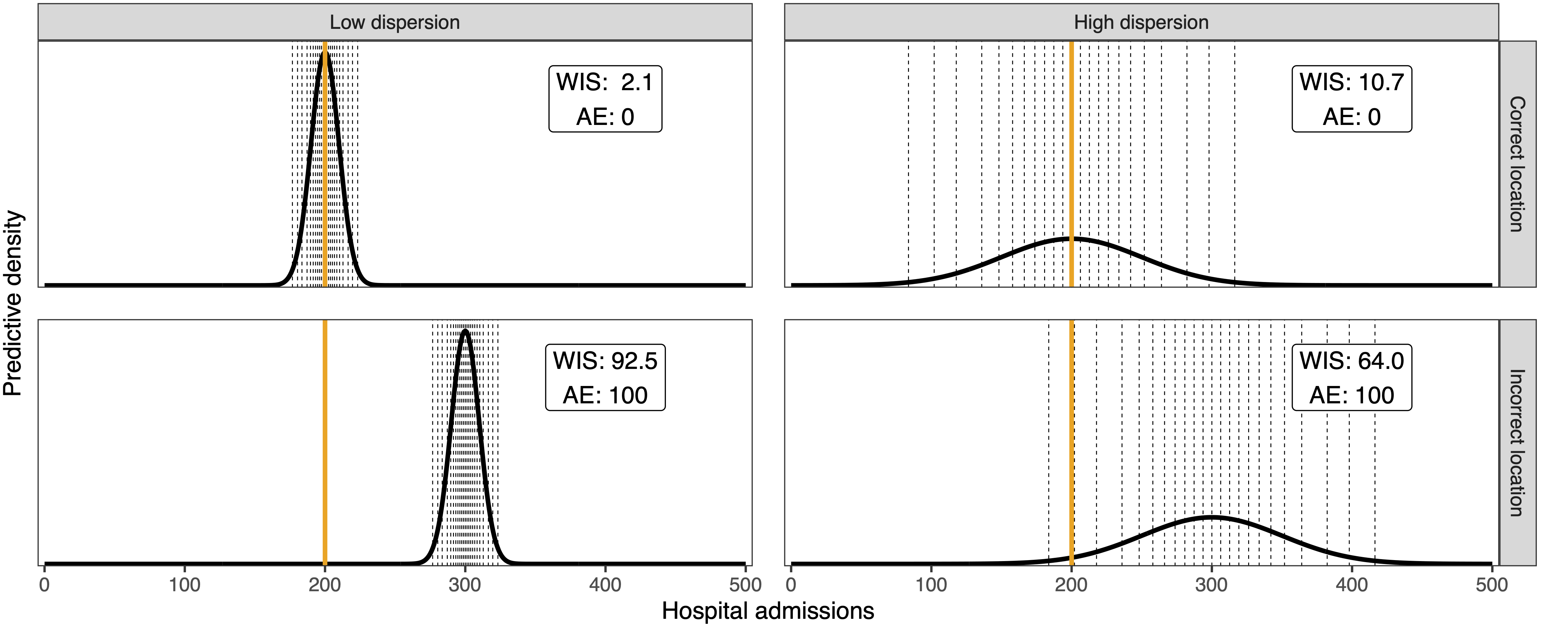

- So-called proper scoring rules incentivise forecasters to express an honest belief about the future

- Many proper scoring rules (and other metrics) are available to assess probabilistic forecasts
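To make the "honest belief" property concrete, here is a minimal sketch using the log score for a binary event. The true probability of 0.7 and the grid of candidate reports are illustrative assumptions, not values from the source. The sketch checks which reported probability gives the best expected log score.

```python
import math

def expected_log_score(reported_prob, true_prob):
    """Expected log score for a binary event that occurs with probability true_prob."""
    return (true_prob * math.log(reported_prob)
            + (1 - true_prob) * math.log(1 - reported_prob))

true_prob = 0.7  # assumed true probability of the event (illustrative)
candidates = [i / 100 for i in range(1, 100)]  # reported probabilities 0.01 to 0.99

# The report that maximises the expected score is the true probability itself.
best_report = max(candidates, key=lambda q: expected_log_score(q, true_prob))
print(best_report)  # 0.7
```

A forecaster who overstates or understates their belief (for example, reporting 0.9) gets a worse expected score, which is what makes the rule proper.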

The forecasting paradigm

Maximise sharpness subject to calibration

- Statements about the future should be correct (“calibration”)

- Statements about the future should aim to have narrow uncertainty (“sharpness”)

Figure credit: Evan Ray

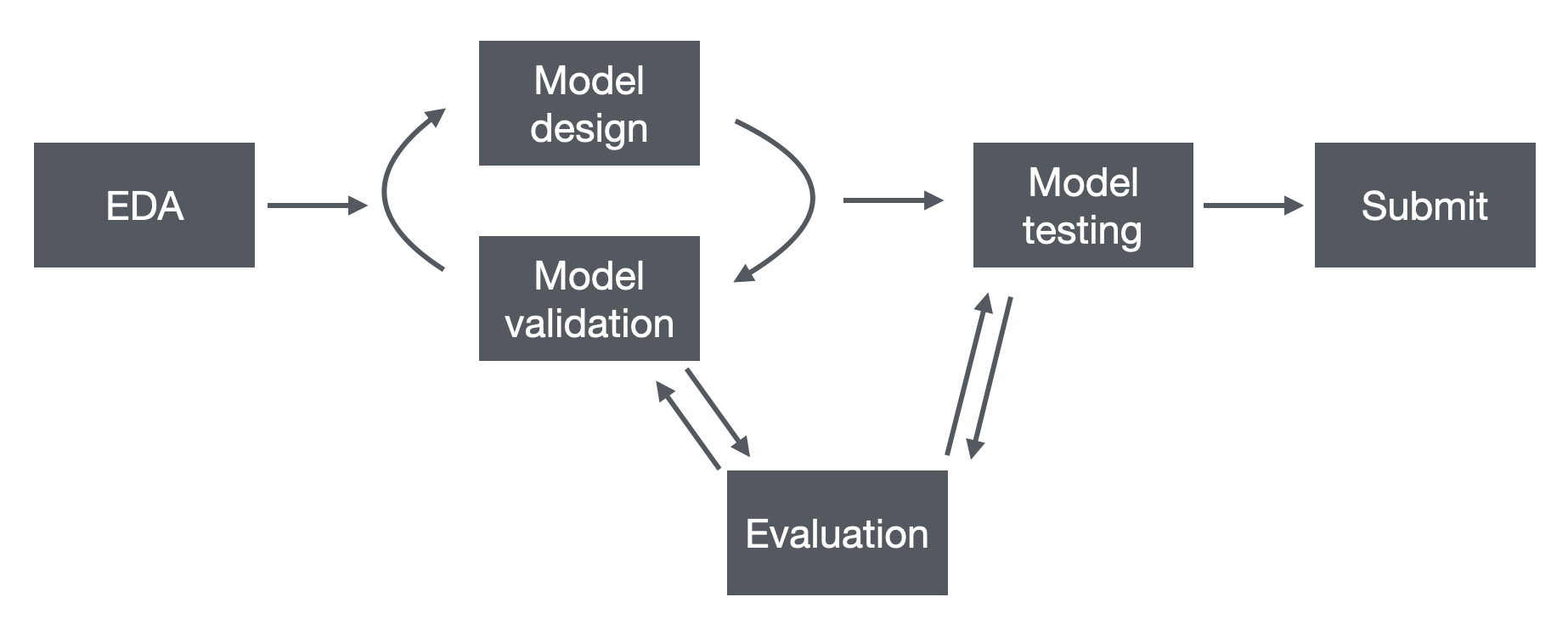

Forecast workflow

Evaluating forecasts

- Vibes: Do the forecasts look reasonable based on recent data?

- Scores: Measure the forecast accuracy. Different scores measure different important facets of performance.

- Testing and validation: Design an experiment that evaluates forecasts made at different times, without “data leakage”.

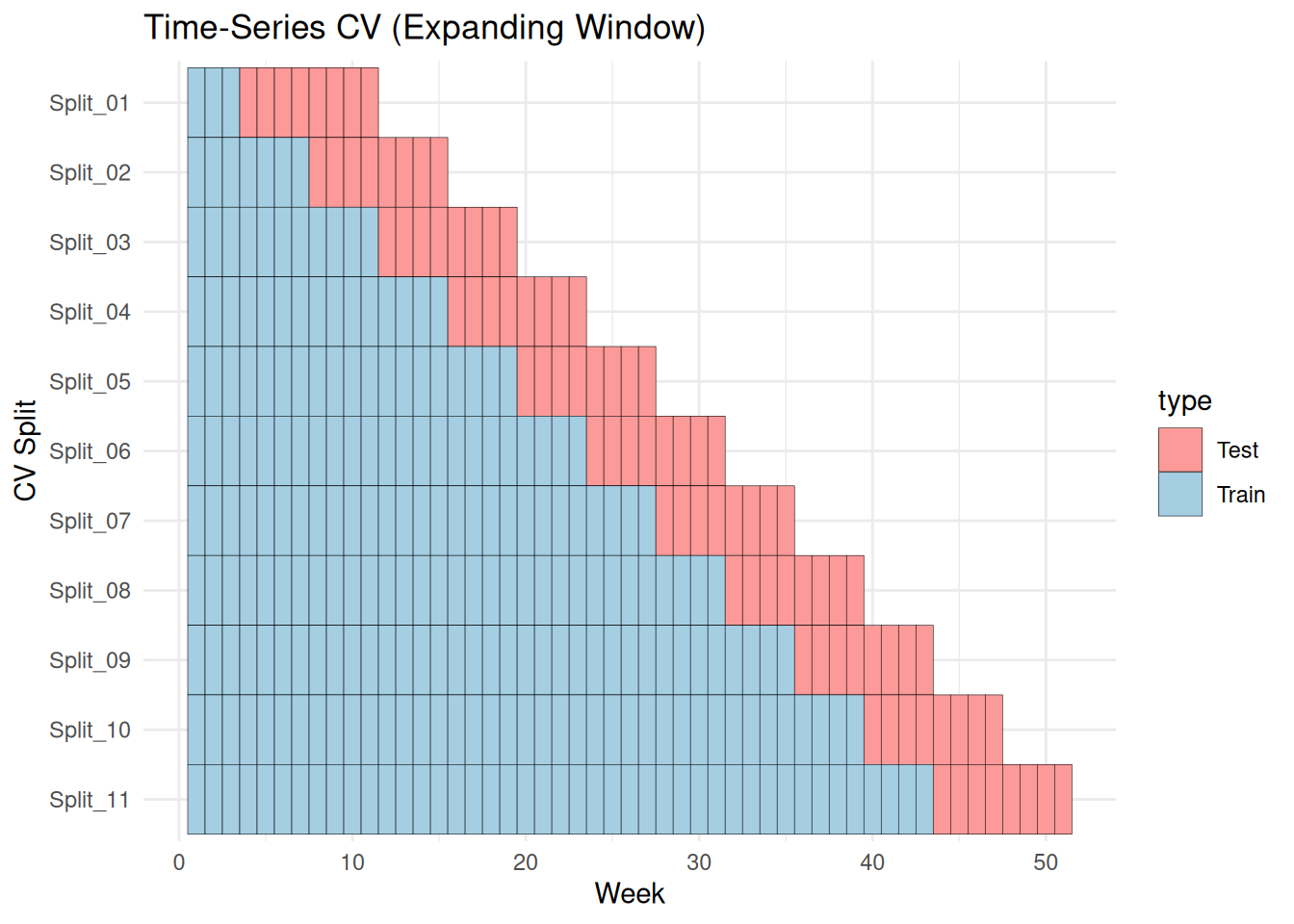

Don’t let the model cheat: cross-validation

Picking a cross-validation scheme that is specific to time-series data ensures that your model only sees data from before the date each forecast is made.

Time-series cross-validation schematic

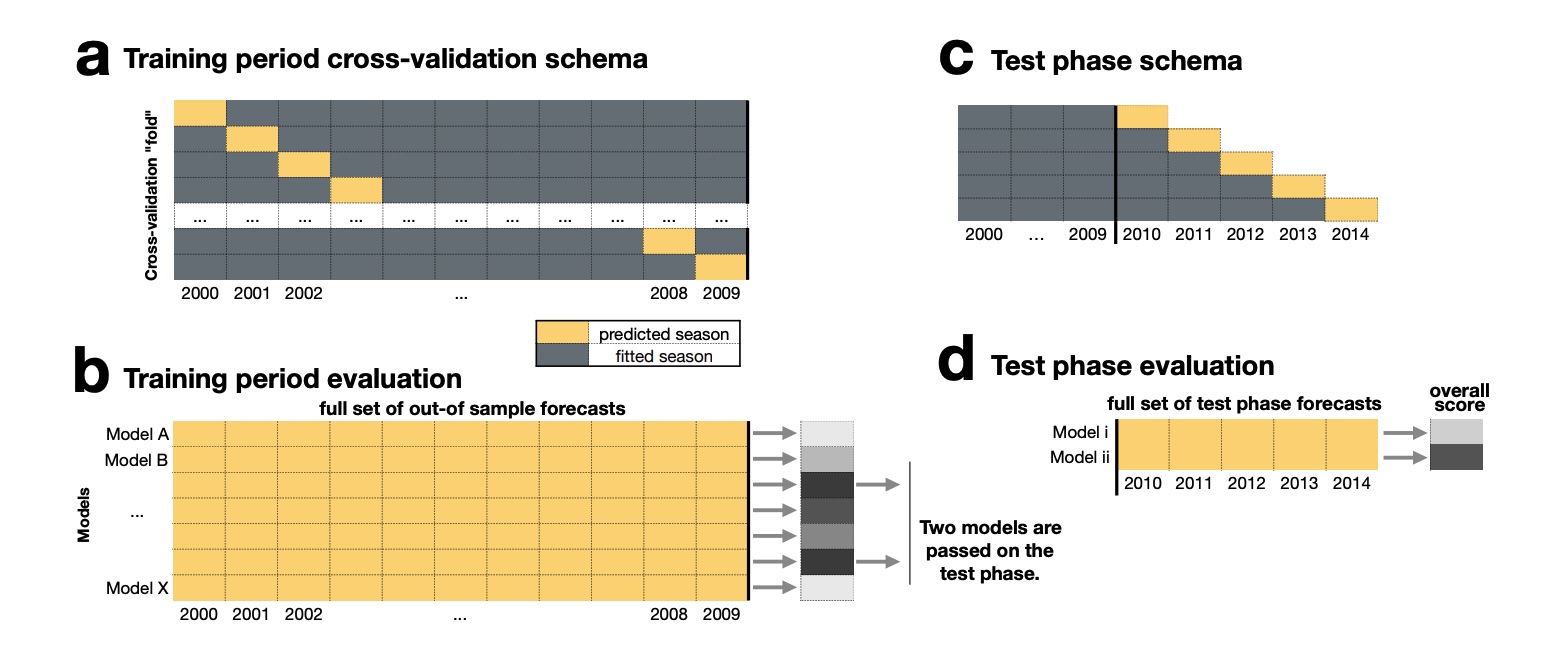
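A minimal sketch of one common time-series scheme, expanding-window cross-validation, is shown below. The function name and its parameters (`min_train`, `horizon`, `step`) are hypothetical. The key property is that every training index comes strictly before every test index in the same split.

```python
def expanding_window_splits(n_obs, min_train, horizon, step=1):
    """Yield (train_indices, test_indices) pairs for expanding-window CV.

    The training set is every observation before the forecast origin;
    the test set is the next `horizon` observations.
    """
    origin = min_train
    while origin + horizon <= n_obs:
        train = list(range(origin))
        test = list(range(origin, origin + horizon))
        yield train, test
        origin += step

# Example: 10 time points, at least 6 for training, 2-step-ahead forecasts
splits = list(expanding_window_splits(n_obs=10, min_train=6, horizon=2, step=2))
for train, test in splits:
    print("train up to index", train[-1], "-> test", test)
```

Randomly shuffled folds, as used for independent data, would let the model train on observations that come after the ones it is asked to predict.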

Don’t let yourself cheat: prospective validation

Prospective validation is when you “register” one (or a small number of) forecasts as your “best” prediction of the future, before the eventual data are observed. If you know what the held-out data look like, you might make subconscious decisions about which model to choose, biasing the final results.

Figure credit: Lauer et al, 2020

Validation with epi data

It can be really hard to keep track of which version of epi surveillance data was released when.

This makes prospective evaluation even more important.

For any retrospective experiment, you should always look for evidence that an analyst didn’t let the model cheat!
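One way to guard against this in a retrospective experiment is to reconstruct the data "as of" each forecast date, keeping only values that had been reported by then. The sketch below assumes a hypothetical record layout of (reference date, report date, value), with made-up numbers. Real surveillance systems store versions in their own formats.

```python
from datetime import date

def data_as_of(records, as_of):
    """Return {reference_date: value} using only reports released on or before as_of.

    When a reference date was revised, the latest report available at as_of wins.
    """
    latest = {}
    for ref_date, report_date, value in sorted(records, key=lambda r: r[1]):
        if report_date <= as_of:
            latest[ref_date] = value
    return dict(sorted(latest.items()))

# Illustrative records: (reference_date, report_date, value)
records = [
    (date(2024, 1, 6), date(2024, 1, 10), 100),
    (date(2024, 1, 6), date(2024, 1, 17), 130),   # later upward revision
    (date(2024, 1, 13), date(2024, 1, 17), 90),
    (date(2024, 1, 13), date(2024, 1, 24), 125),  # later upward revision
]

snapshot = data_as_of(records, as_of=date(2024, 1, 17))
print(snapshot)
```

A model fitted on the 17 January snapshot sees 90 for the week of 13 January, not the later revised value of 125, which is what a real-time forecaster would have seen.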

Your Turn

- Build and visualize forecasts from multiple simple models.

- Evaluate the forecasts using proper scoring rules.

- Design and run an experiment to compare forecasts made from multiple models.

- Evaluate forecasts across forecast dates, horizons, and other dimensions.
